In a recent news item, cnn.gr … told us that "A robot collapsed after 20 hours of continuous work. Its job was to move heavy pallets of goods in the warehouse." Workers rushed to … help it (human workers, of course, not robot "colleagues"), but the robot "had overheated and suffered catastrophic failure." A heart attack!

So how is this any different from a car or a washing machine that breaks down and either has to be thrown away and replaced or, if it can be repaired, gets repaired?

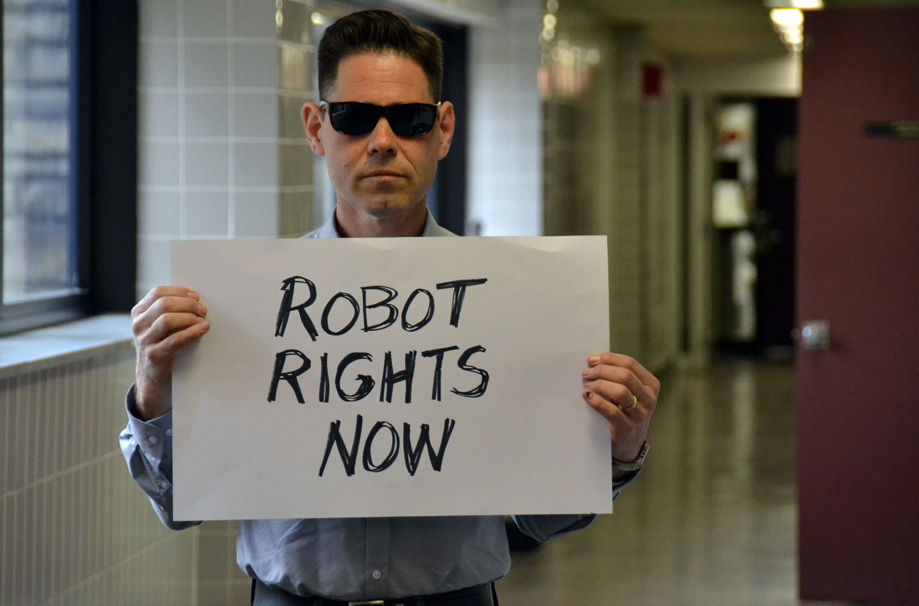

But what exactly is the message here, at a time when they are trying to sell us dangerous artificial intelligence? That robots resemble humans and can collapse? In other words, they are trying to equate robots with humans and with… our rights! Do not be surprised if before long they are also selling us… rights for robots and artificial intelligence!!

Unfortunately, in the year 2023 we find ourselves in a dangerously mad and frightening world, where I can only hope that human cleverness does not meet its own self-destruction…

Φανούλα Αργυρού

Published in the Cypriot newspaper Σημερινή

WSJ: Should Robots With Artificial Intelligence Have Moral or Legal Rights?

As artificial intelligence continues to advance, researchers, scholars and ethicists consider whether robots deserve to be treated more like people

By Daniel Akst April 10, 2023

Last year a software engineer at Google made an unusual assertion: that an artificial-intelligence chatbot developed at the company had become sentient, was entitled to rights as a person and might even have a soul. After what the company called a “lengthy engagement” with the employee on the issue, Google fired him.

It’s unlikely this will be the last such episode. Artificial intelligence is writing essays, winning at chess, detecting likely cancers and making business decisions. That’s just the beginning for a technology that will only grow more powerful and pervasive, bolstering longstanding worries that robots might someday overtake us.

Yet far less attention has been paid to how we should treat these new forms of intelligence, some of which will be embodied in increasingly anthropomorphic forms. Might we eventually owe them some kind of moral or legal rights? Might we feel we should treat them like people if they look and act the part?

Answering those questions will force society to address profound social, ethical and legal quandaries. What exactly is it that entitles a being to rights, and what kind of rights should those be? Are there helpful parallels in the human relationship with animals? Will the synthetic minds of tomorrow, quite possibly destined to surpass human intelligence, someday be entitled to vote or to marry? If they make an articulate demand for such rights, will anyone be in a position to say no?

These concerns might seem far-fetched. But the robot invasion is already well under way, and the question of rights for these soon-to-be-ubiquitous artificial forms of intelligence has gained urgency from the sudden prominence of ChatGPT and the AI-powered new form of Bing, Microsoft’s search engine, both of which have astounded with their sophisticated responses to user questions.

“We need to think about this right now,” says David J. Gunkel, author of the 2018 book “Robot Rights” and other works on the subject. Citing the rapid spread of AI and its fast-growing capabilities, he adds: “We are already in this territory.”

‘Moral disaster’

In fact, legal scholars, philosophers and roboticists have been debating these questions for years—and the general thrust of these discussions is that if and when artificial intelligence reaches some sufficiently advanced threshold, rights of some kind must follow. “It would be a moral disaster,” the philosophers Eric Schwitzgebel and Mara Garza wrote in a 2015 paper, “if our future society constructed large numbers of human-grade AIs, as self-aware as we are, as anxious about their future, and as capable of joy and suffering, simply to torture, enslave and kill them for trivial reasons.”

The primary basis for this view is the prospect that robots may achieve something like sentience—the ability to perceive and feel. The difficult question is whether artificial beings will ever get there, or if at most they can only emulate consciousness, as they seem to be doing now.

Some people consider appearances to be evidence enough, arguing that this is the only evidence we have for sentience in humans. The mathematician Alan Turing famously held that if a computer acts like a sentient being, then we ought to consider it one. Mr. Turing’s British countryman, the computer pioneer and chess expert David Levy, agrees but goes further. As a result of machine learning, he says, artificial intelligence that at first merely mimics human sentiment “might learn new feelings, new emotions that we’ve never encountered, or that we’ve never described.”

If robots get any rights at all, Dr. Gunkel says, first to come will be basic protections of the kind that exist in many places against cruelty toward animals. Like animals, robots could be seen as what philosophers call “moral patients,” or beings worthy of moral consideration whether or not they can fulfill the duties and responsibilities of “moral agents.” Your dog is a moral patient, and so are you. But you are also a moral agent—someone who is able to tell right from wrong and act accordingly.

Of course, living animals are conscious, whereas artificial forms of intelligence exist as lines of code, even if embodied in the kind of machines we call robots. Nonetheless, people might recoil from violence, bullying or abusive language toward familiar and conscious-seeming beings out of sympathy, or for fear that such behavior will coarsen the rest of us.

That’s a second argument for robot rights. MIT roboticist Kate Darling suggests that preventing abuse of robots that interact with people on a social level—and evoke anthropomorphic responses—may help humans avoid becoming desensitized about violence toward one another. Kant, she observes, said that “he who is cruel to animals becomes hard also in his dealings with men.”

In an online survey, the computer scientist Gabriel Lima and colleagues found that although respondents “mainly disfavor AI and robot rights” such as rights to privacy, payment for work, free speech and to sue or be sued, “they are supportive of protecting electronic agents from cruelty.” Another survey, led by Maartje M.A. De Graaf, a specialist in human-robot interactions, found that “people are more willing to grant basic robot rights such as access to energy and the right to update” compared with “sociopolitical rights such as voting rights and the right to own property.”

Autonomous action

The case for robot rights will probably grow stronger as artificial intelligence gains in sophistication. That leads to a third argument for rights, which is that robots will increasingly be capable of autonomous action, and potentially both be responsible for their behavior and entitled to due process. At that point, robots would be moral agents—and might well make the case that they are entitled to commensurate rights and privileges, including owning wealth, entering into legal agreements and even casting ballots. Some people foresee a sort of citizenship, too.

“Any self-aware robot that speaks a known language and is able to recognize moral alternatives, and thus make moral choices,” Mr. Levy contends, “should be considered a worthy ‘robot person’ in our society. If that is so, should they not also possess the rights and duties of all citizens?”

Some experts foresee the rise of a new branch of law both protecting robots and holding them to account. There is already some theorizing on whether robots should be held legally liable when they do something wrong, and on methods of suitable punishment. And then there is the status of corporations, which offers a precedent for treating nonhuman entities as people under the law. In his book “The Reasonable Robot,” law professor and physician Ryan Abbott argues that, to encourage the use of AI for innovation, the law shouldn’t discriminate between the behavior of artificial intelligence and that of humans. Dr. Abbott was involved in obtaining, from South Africa, what appears to be the world’s first patent listing AI as the inventor (if not the patent owner).

An even more self-interested argument for robot rights is that if we are nice to them, they might be nice to us when they take over. Seeing ourselves as part of a harmonious moral ecosystem, rather than as possessors of dominion over the Earth, “preserves space for us in a world that may hypothetically contain something that is more powerful than we are,” says Brian Christian, whose bestselling books explore how humans and computers are changing one another.

Humanity devalued

Still, many disagree with the idea that artificial intelligence should have rights or personhood, especially if the basis is some presumed sentience in these new beings. Sentience is notoriously hard to define, and if it exists in a robot, a human presumably put it there—which critics like Joanna J. Bryson, a scholar specializing in ethics and technology, insist humans shouldn’t do.

Dr. Bryson argues in a paper with the provocative title “Robots Should Be Slaves” that “it would be wrong to let people think that their robots are persons,” and what’s more, it would be wrong to build robots we owed personhood to because it would only further devalue humanity. Robots, according to this view, are mere devices akin to sophisticated toasters. “No one,” she writes, “should ever need to hesitate an instant in deciding whether to save a human or a robot from a burning building.”

MIT professor Sherry Turkle, who studies the sociology of technology, also recoils from the prospect that robots might be considered persons, which she fears would undermine the specialness of humans. Robotic caregivers may offer some comfort to people in nursing homes, for example, but to Dr. Turkle they are little more than a cheap expedient, inadequate to the task of providing human warmth and understanding. Robots, she says, aren’t “moral creatures. They’re intelligent performative objects.”

Yet she acknowledges that when people deal with robots, we tend to treat them as sentient even if they aren’t. As a result, she predicts that “there is going to be a kind of new category of object relations that will form around the inanimate—new rules, and new norms.”

Dr. Darling, the MIT roboticist, demonstrated the kind of norms likely to arise in our robotic future by conducting a workshop in which participants played with Pleos, “cute robotic dinosaurs that are roughly the size of small cats.” When, after playtime, the humans were asked to tie up and kill the creatures, “drama ensued, with many of the participants refusing to ‘hurt’ the robots, and even physically protecting them,” she says. One removed her Pleo’s battery to “spare it the pain” even though all participants knew the creatures were unfeeling toys.

“It’s all about anthropomorphizing,” Dr. Gunkel says, adding that this isn’t a bug but a feature of humankind. “We project states of mind onto our dogs and cats and computers. This is not something we can shut down.”

Ultimately, the philosopher Sidney Hook observed back in 1959, what will determine how people treat artificially intelligent agents is “whether they look like and behave like other people we know.” If robots approach human function and appearance, if they live among us, caring for us and talking with us, it might be difficult for people to withhold rights. What, after all, will distinguish us from them aside from provenance and chemical composition?

Given adequate capabilities, robots themselves will someday make this case, and Mr. Levy thinks that by then humans, having grown close to their robot helpers, will probably agree. “If a robot has all the appearances of being human,” he says, people will “find it much easier to accept the robot as being sentient, of being worthy of our affections, leading us to accept it as having character and being alive.”

In his book “Love and Sex with Robots,” Mr. Levy says humans will eventually marry robots, just as nowadays they seem to be adopting pets instead of having babies. For intimate relationships between humans and robots to be fulfilling, he says, robots will need considerable personality, sensitivity and even autonomy. They will also have to look and feel to a great extent like you and me, though very likely better. He imagines that romantic partnerships between robots and people will get started in earnest around midcentury.

Source: https://www.wsj.com/articles/robots-ai-legal-rights-3c47ef40